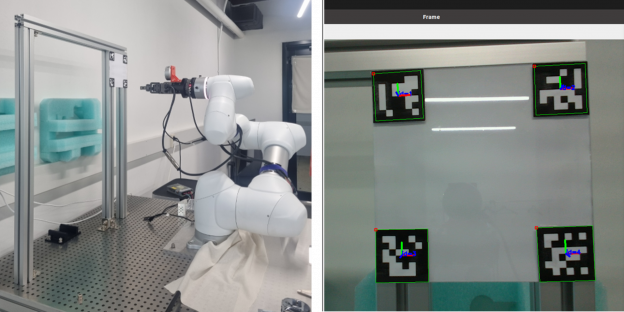

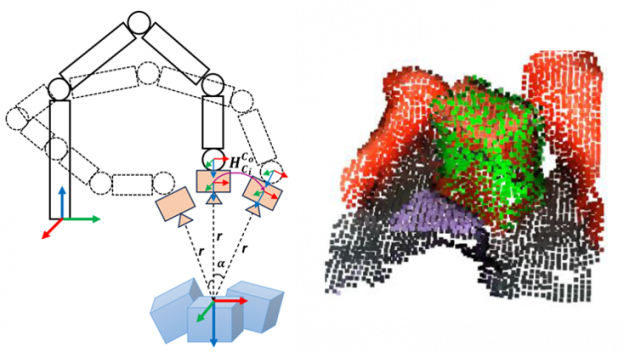

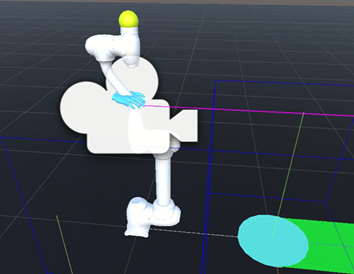

Sieun Lee (KAIST ME) developed a program that lets a collaborative robot align itself with an object using ArUco markers. Locating the markers with a camera mounted on the robot can be applied to autonomous robots that tend machine tools, for example to find the machine's door and move a workpiece without collisions. She implemented the application after learning robot control with ROS (Robot Operating System) and image processing with OpenCV during her individual summer research in 2024.